Multiple Comparisons (Post Hoc Testing)

Whenever a statistical test concludes that a relationship is significant when, in reality, there is no relationship, a false discovery has been made. When multiple tests are conducted this leads to a problem known as the multiple testing problem, whereby the more tests that are conducted, the more false discoveries are made. For example, if one hundred independent significance tests are conducted at the 0.05 level of significance and in truth there are no real relationships to be found, we would nevertheless expect, on average, to incorrectly conclude that five of the tests are significant (i.e., 5 = 100 × 0.05).
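The expectation of five false discoveries can be checked with a small simulation. The sketch below is illustrative only: it assumes that, when the null hypothesis is true, each p-value is uniformly distributed between 0 and 1 (which holds for continuous test statistics), and the function name `count_false_discoveries` is made up for this example.

```python
import random

def count_false_discoveries(n_tests=100, alpha=0.05, rng=random):
    """Count 'significant' results when every null hypothesis is true.

    Assumption: under a true null hypothesis a p-value is uniform on [0, 1],
    so each test is simulated by drawing one uniform random number.
    """
    return sum(rng.random() < alpha for _ in range(n_tests))

rng = random.Random(1)  # fixed seed so that the run is repeatable
n_repeats = 2000
counts = [count_false_discoveries(rng=rng) for _ in range(n_repeats)]
average = sum(counts) / n_repeats
print(f"average false discoveries per 100 tests: {average:.2f}")  # close to 5
```

Any single batch of 100 tests can produce more or fewer than five false discoveries; only the long-run average settles near 100 × 0.05.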

Worked example of the multiple comparison problem

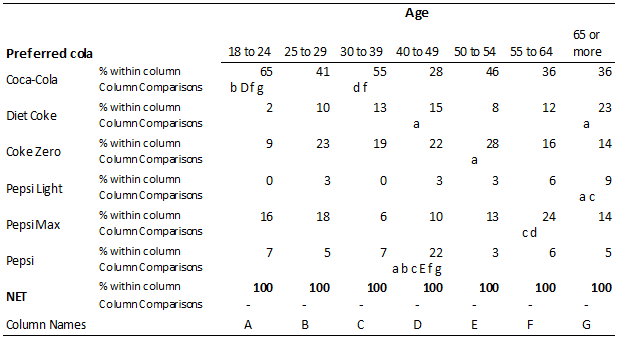

The table above shows column comparisons testing for differences in cola preference amongst different age groups. A total of 21 significance tests have been conducted in each row (i.e., this is the number of possible pairs of columns). The first row of the table shows that there are six significant differences between the age groups in terms of their preferences for Coca-Cola. The table below shows the 21 p-values.

| Age group | Column | A | B | C | D | E | F | G |
|---|---|---|---|---|---|---|---|---|
| 18 to 24 | A | | | | | | | |
| 25 to 29 | B | .0260 | | | | | | |
| 30 to 39 | C | .2895 | .1511 | | | | | |
| 40 to 49 | D | .0002 | .2062 | .0020 | | | | |
| 50 to 54 | E | .0793 | .6424 | .3615 | .0763 | | | |
| 55 to 64 | F | .0043 | .6295 | .0358 | .4118 | .3300 | | |
| 65 or more | G | .0250 | .7199 | .1178 | .5089 | .4516 | .9767 | |

When testing at the 0.05 level of significance, conducting 21 tests gives a non-trivial chance of at least one false discovery by chance alone. If, in reality, there are no differences between the true proportions, then with 21 tests at the 0.05 level we would expect an average of 21 × 0.05 = 1.05 significant results per row (in any one row the count may be 0, 1, 2 or more, with counts well above 1 becoming progressively less likely).
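To see how the number of false discoveries in one row is distributed, the following sketch uses the binomial distribution. It assumes the 21 tests are independent, which is a simplification for pairwise comparisons that share columns; the function name `prob_false_discoveries` is illustrative.

```python
from math import comb

def prob_false_discoveries(k, n_tests=21, alpha=0.05):
    """Binomial probability of exactly k significant results among n_tests
    independent tests when every null hypothesis is true."""
    return comb(n_tests, k) * alpha**k * (1 - alpha) ** (n_tests - k)

n_pairs = comb(7, 2)        # 7 age groups give 21 pairs of columns
expected = n_pairs * 0.05   # average number of false discoveries per row

for k in range(4):
    print(k, round(prob_false_discoveries(k, n_pairs), 3))
```

Under these assumptions, exactly one false discovery in a row is slightly more likely than none at all.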

The cause of the problem relates to the underlying meaning of the p-value. The p-value is defined as the probability that we would get a result as big as or bigger than the one observed if, in fact, there is no difference in the population (see Formal Hypothesis Testing). When we conduct a single significance test, our error rate (i.e., the probability of a false discovery) is just the p-value itself[note 1]. For example, if we have a p-value of 0.05 and we conclude it is significant, the probability of a false discovery when there is in fact no difference is, by definition, 0.05. However, the more tests we do, the greater the probability of false discoveries.

If we have two unrelated tests and both have a p-value of 0.05 and are concluded to be significant, we can compute the following probabilities:

- The probability that both results are false discoveries is 0.05 × 0.05 = 0.0025.

- The probability that one but not both of the results is a false discovery is 0.05 × 0.95 + 0.95 × 0.05 = 0.095.

- The probability that neither of the results is a false discovery is 0.95 × 0.95 = 0.9025.

The sum of the first two probabilities, 0.0975, is the probability of getting one or more false discoveries if there are no actual differences. This is often known as the familywise error rate. If we do 21 independent tests at the 0.05 level, our probability of one or more false discoveries jumps to $1 - (1 - 0.05)^{21} \approx 0.66$. Thus, we have a better than even chance of obtaining a false discovery on every single row of a table such as the one shown above when doing column comparisons. The consequence of this is dire, as it implies that many and perhaps most statistically significant results may be false discoveries.
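The familywise error rate for any number of independent tests follows the same logic as the two-test case. A minimal sketch, with the function name `familywise_error_rate` chosen for this example:

```python
def familywise_error_rate(n_tests, alpha=0.05):
    """Probability of at least one false discovery among n_tests independent
    tests, each conducted at level alpha, when every null hypothesis is true."""
    return 1 - (1 - alpha) ** n_tests

for m in (1, 2, 21, 100):
    print(m, round(familywise_error_rate(m), 4))
```

For two tests this reproduces the 0.0975 computed above, and the rate climbs quickly as the number of tests grows.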

Various corrections to statistical testing procedures have been developed to minimize the number of false discoveries that occur when conducting multiple tests. The next section provides an overview of some of the multiple comparison corrections. The section after that lists different strategies for applying multiple comparison corrections to tables.

Multiple comparison corrections

Many corrections have been developed for multiple comparisons. The simplest and most widely known is the Bonferroni correction. Its simplicity is not a virtue and it is doubtful that the Bonferroni correction should be widely used in survey research.

Numerous improvements over the Bonferroni correction were developed in the middle of the 20th century. These are briefly reviewed in the next sub-section. Then, the False Discovery Rate, which dominates most modern research in this field, is described.

Bonferroni correction

As computed above, if we are conducting 21 independent tests and the null hypothesis is true in all instances, when we test at the 0.05 level we have a 66% chance of one or more false discoveries ($1 - 0.95^{21} \approx 0.66$). Using the same maths, but in reverse, if we wish to reduce the probability of making one or more false discoveries to 0.05 we should conduct each test at the $1 - (1 - 0.05)^{1/21} \approx 0.00244$ level of significance.

The Bonferroni inequality is an approximate formula for doing this computation. The approximation is that $1 - (1 - \alpha)^{1/m} \approx \alpha / m$, and thus in our example, $0.05 / 21 \approx 0.00238$ (where $\alpha$ is the significance level and $m$ is the number of tests).
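As a rough numerical check of how close the approximation is, the sketch below compares the exact per-test level for independent tests with the simple division of the significance level by the number of tests. Both function names are illustrative.

```python
def exact_per_test_level(familywise_alpha, n_tests):
    """Per-test level that gives exactly the target familywise error rate for
    independent tests: solves 1 - (1 - a) ** n_tests == familywise_alpha."""
    return 1 - (1 - familywise_alpha) ** (1 / n_tests)

def bonferroni_per_test_level(familywise_alpha, n_tests):
    """Bonferroni approximation: divide the target rate by the number of tests."""
    return familywise_alpha / n_tests

exact = exact_per_test_level(0.05, 21)
approx = bonferroni_per_test_level(0.05, 21)
print(f"exact: {exact:.5f}, Bonferroni: {approx:.5f}")
```

The Bonferroni value is always slightly smaller than the exact one, so it is marginally more conservative.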

The Bonferroni correction involves testing for significance using a significance level of $\alpha / m$ for each test. Thus, returning to the table of p-values above, only two of the pairs, A-D and C-D, have p-values less than or equal to the new cut-off of $0.05 / 21 \approx 0.00238$, so only these are concluded to be statistically significant.
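A minimal sketch of the correction applied to the 21 p-values from the table above. The dictionary layout and the pair labels (row letter first) are choices made for this illustration.

```python
# p-values for the 21 pairwise age comparisons of Coca-Cola preference
p_values = {
    "B-A": 0.0260,
    "C-A": 0.2895, "C-B": 0.1511,
    "D-A": 0.0002, "D-B": 0.2062, "D-C": 0.0020,
    "E-A": 0.0793, "E-B": 0.6424, "E-C": 0.3615, "E-D": 0.0763,
    "F-A": 0.0043, "F-B": 0.6295, "F-C": 0.0358, "F-D": 0.4118, "F-E": 0.3300,
    "G-A": 0.0250, "G-B": 0.7199, "G-C": 0.1178, "G-D": 0.5089, "G-E": 0.4516,
    "G-F": 0.9767,
}

alpha = 0.05
cutoff = alpha / len(p_values)  # Bonferroni cut-off: alpha divided by number of tests

uncorrected = sorted(pair for pair, p in p_values.items() if p <= alpha)
bonferroni = sorted(pair for pair, p in p_values.items() if p <= cutoff)

print(len(uncorrected), "significant without correction")
print(bonferroni, "significant with the Bonferroni correction")
```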

As mentioned above, while the Bonferroni correction is simple and it certainly reduces the number of false discoveries, it is in many ways a poor correction.

Poor power

The Bonferroni correction results in a large reduction in the power of statistical tests. That is, because the cut-off value is reduced, it becomes substantially more difficult for any result to be concluded as being statistically significant, irrespective of whether it is true or not. To use the jargon, the Bonferroni correction results in a loss of power (i.e., the ability of the test to reject the null hypothesis when it is false declines). In a sense this is a necessary outcome of any multiple comparison correction as the proportion of false discoveries and the power of a test are two sides of the same coin. However, the problem with the Bonferroni correction is that it loses more power than any of the alternative multiple comparison corrections and this loss of power does not confer any other benefits (other than simplicity).

Counterintuitive results

Consider a situation where 10 tests have been conducted and each has a p-value of 0.01. Using the Bonferroni correction with a significance level of 0.05 results in all the ten tests being concluded as not being statistically significant. That is, the corrected cut-off for concluding statistical significance is 0.05 / 10 = 0.005 and 0.01 > 0.005.

When we do lots of tests we are almost certain to make a few false discoveries if the truth is that there are no real differences in the data (i.e., the null hypothesis is always true). The whole logic is one of relatively rare events - that is the false discoveries - inevitably occurring because we conduct so many tests. However, in the example of 10 tests where all have a p-value of 0.01 it seems highly unlikely that the null hypothesis can be true in all of these instances and yet this is precisely the conclusion that the Bonferroni correction leads to.

In practice, this problem does occur regularly. The False Discovery Rate correction, which is described later, is a substantial improvement over the Bonferroni correction in this respect.
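The ten-test example can be made concrete with a few lines of arithmetic. The sketch assumes the ten tests are independent, which the example does not state; under that assumption it shows both the Bonferroni outcome and how unlikely ten such small p-values would be if every null hypothesis were true.

```python
alpha = 0.05
p_values = [0.01] * 10

bonferroni_cutoff = alpha / len(p_values)
n_significant = sum(p <= bonferroni_cutoff for p in p_values)

# Chance that ten independent tests all give p <= 0.01 when every null is true
prob_all_small_under_null = 0.01 ** len(p_values)

print("Bonferroni cut-off:", bonferroni_cutoff)
print("tests declared significant:", n_significant)
print("probability of ten such p-values under the null:", prob_all_small_under_null)
```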

Traditional non-Bonferroni corrections

Numerous alternatives have been developed to the Bonferroni correction. Popular corrections include Fisher's LSD, Tukey's HSD, Duncan’s New Multiple Range Test and Newman-Keuls (S-N-K).[1] The different corrections often lead to different results. With the example we have been discussing, Tukey's HSD finds two comparisons significant, Newman-Keuls (S-N-K) finds three, Duncan’s New Multiple Range Test finds four and Fisher's LSD finds six.

In general, these tests have superior power to the Bonferroni correction. However, most of them are only applicable when comparing one numeric variable between multiple independent groups, whereas the Bonferroni correction, although it assumes independence, can still be applied when this assumption is not met. Although these traditional corrections can be modified to deal with comparisons of proportions, they generally cannot be modified to deal with multiple response data and they are thus inapplicable to much of survey analysis. Furthermore, these corrections generally cannot be employed with cell comparisons. Consequently, these alternatives tend only to be used in quite specialized areas (e.g., psychology experiments and sensory research), as in more general survey analysis there is little merit in adopting a multiple comparison procedure that can only be used some of the time.

False Discovery Rate correction

The Bonferroni correction, which uses a cut-off of α / m where α is the significance level and m is the number of tests, is computed using the assumption that the null hypothesis is correct for all the tests. However, when the assumption is not met, the correction itself is incorrect.

The table below orders all of the p-values from lowest to highest. The smallest p-value is 0.0002 and this is below the Bonferroni cut-off of 0.05 / 21 ≈ 0.00238 so we conclude it is significant. The next smallest p-value of 0.0020 is also below the Bonferroni cut-off so it is also concluded to be significant. However, the third smallest p-value of 0.0043 is greater than the cut-off, which means that when applying the Bonferroni correction we conclude that it is not significant. However, this is faulty logic. If we believe that the two smallest p-values indicate that the differences being tested are real, we have already come to a conclusion that the underlying assumption of the Bonferroni correction is false (i.e., we have determined that we do not believe that the null hypothesis is true for the first two comparisons). Consequently, when computing the correction we should at the least ignore the first two comparisons, resulting in a revised cut-off of 0.05 / (21 - 2) = 0.00263.

| Comparison | p-value | Rank (i) | FDR cut-off (0.05 × i / 21) | Naïve significance | FDR significance | Bonferroni significance |
|---|---|---|---|---|---|---|
| D-A | .0002 | 1 | .0024 | D-A | D-A | D-A |
| D-C | .0020 | 2 | .0048 | D-C | D-C | D-C |
| F-A | .0043 | 3 | .0071 | F-A | F-A | |
| G-A | .0250 | 4 | .0095 | G-A | | |
| B-A | .0260 | 5 | .0119 | B-A | | |
| F-C | .0358 | 6 | .0143 | F-C | | |
| E-D | .0763 | 7 | .0167 | | | |
| E-A | .0793 | 8 | .0190 | | | |
| G-C | .1178 | 9 | .0214 | | | |
| C-B | .1511 | 10 | .0238 | | | |
| D-B | .2062 | 11 | .0262 | | | |
| C-A | .2895 | 12 | .0286 | | | |
| F-E | .3300 | 13 | .0310 | | | |
| E-C | .3615 | 14 | .0333 | | | |
| F-D | .4118 | 15 | .0357 | | | |
| G-E | .4516 | 16 | .0381 | | | |
| G-D | .5089 | 17 | .0405 | | | |
| F-B | .6295 | 18 | .0429 | | | |
| E-B | .6424 | 19 | .0452 | | | |
| G-B | .7199 | 20 | .0476 | | | |
| G-F | .9767 | 21 | .0500 | | | |

While the cut-off of 0.00263 is more appropriate than the original Bonferroni cut-off of 0.05 / 21 ≈ 0.00238, it is still not quite right. The problem is that in assuming that we have 21 - 2 = 19 comparisons where the null hypothesis is true, we are forgetting that we have already determined that 2 of the tests are significant. This information is pertinent in that if we believe that at least 2 of the 21 tests are significant then it is overly pessimistic to base the computation of the cut-off for the rest of the tests on the assumption that the null hypothesis is true in all instances.

It has been shown that an appropriate cut-off procedure, which is referred to as the False Discovery Rate correction, is:[2]

- Rank order all the tests from smallest to largest p-value.

- Apply a cut-off based on the position in the rank-order. In particular, where i denotes the position in the rank order, starting from 1 for the most significant result and going through to m for the least significant result, the cut-off should be at least as high as α × i / m.

- Use the largest significant cut-off as the overall cut-off. That is, it is possible using this formula that the smallest p-value will be bigger than its cut-off but that some other p-value will be less than or equal to its cut-off (because the cut-off is different for each p-value). In such a situation, the largest cut-off that is met is then re-applied to all the p-values.

Thus, returning to our third smallest p-value of 0.0043, the appropriate cut-off becomes 0.05 × 3 / 21 = 0.007143 and the third comparison is concluded to be significant. The False Discovery Rate correction therefore leads to more results being significant than the Bonferroni correction.
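The three steps above can be sketched in Python as follows. This is an illustrative sketch rather than any package's implementation; the function name `benjamini_hochberg` (after the authors of the cited paper) and the dictionary layout are assumptions of this example.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return the labels declared significant by the rank-based cut-off
    procedure described above. p_values maps a label to its p-value."""
    m = len(p_values)
    # Step 1: rank order the tests from smallest to largest p-value.
    ranked = sorted(p_values.items(), key=lambda item: item[1])
    # Steps 2 and 3: find the largest rank i whose p-value is <= q * i / m.
    largest_rank = 0
    for i, (_, p) in enumerate(ranked, start=1):
        if p <= q * i / m:
            largest_rank = i
    # Everything up to that rank is significant, even if an individual
    # p-value exceeded its own rank-specific cut-off.
    return [label for label, _ in ranked[:largest_rank]]

p_values = {
    "B-A": 0.0260,
    "C-A": 0.2895, "C-B": 0.1511,
    "D-A": 0.0002, "D-B": 0.2062, "D-C": 0.0020,
    "E-A": 0.0793, "E-B": 0.6424, "E-C": 0.3615, "E-D": 0.0763,
    "F-A": 0.0043, "F-B": 0.6295, "F-C": 0.0358, "F-D": 0.4118, "F-E": 0.3300,
    "G-A": 0.0250, "G-B": 0.7199, "G-C": 0.1178, "G-D": 0.5089, "G-E": 0.4516,
    "G-F": 0.9767,
}

fdr_significant = benjamini_hochberg(p_values)
print(fdr_significant)
```

Scanning every rank, rather than stopping at the first failure, is what implements the "largest significant cut-off" rule in the third step.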

Some technical details about False Discovery Rate corrections

- The False Discovery Rate correction is a much newer technique than the traditional multiple comparison correction techniques. In the biological sciences, which are at the heart of much of modern statistical testing, the FDR is the default approach to dealing with multiple comparisons. However, the method is still relatively unknown in survey research. Nevertheless, there is good reason to believe that the false discovery rate is superior. Whereas in survey research we have no real way of knowing when we are correct, in the biological sciences studies are often reproduced, and the popularity of the false discovery rate approach is presumably attributable to its accuracy.

- The above description states that "the cut-off should be at least as high" as that suggested by the formula. It is possible to calculate a more accurate cut-off,[3] but this entails a considerable increase in complexity. The more accurate cut-offs are always greater than or equal to the one described above and thus have greater power.

- In the discussion of the Bonferroni correction it was noted that with 10 tests each with a p-value of 0.01, none are significant when testing at the 0.05 level. By contrast, with the False Discovery Rate correction, all are found to be significant (i.e., the False Discovery Rate correction is substantially more powerful than the Bonferroni correction).

- The False Discovery Rate correction has a slightly different objective to the Bonferroni correction (and other traditional corrections). While the Bonferroni correction is focused on trying to reduce the familywise error rate, the False Discovery Rate correction seeks to reduce the rate of false discoveries. That is, when the cut-off of α × i / m is used, the expected proportion of discoveries that are false is at most α. Thus, α is really the false discovery rate rather than the familywise error rate and for this reason it is sometimes referred to as q to avoid confusion. There are no hard and fast rules about what value of q is appropriate. It is not unknown to have it as high as 0.20 (i.e., on average up to 20% of the results marked as significant will be “false”).

- Although the False Discovery Rate correction is computed under an assumption that the tests are independent, the approach still works much better than either traditional testing or the Bonferroni correction when this assumption is not met, such as when conducting pairwise comparisons.[4]
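The difference in power between the two corrections can be illustrated with the earlier example of ten tests that each have a p-value of 0.01. The sketch below is a simplified illustration; both function names are hypothetical.

```python
def n_significant_bonferroni(p_values, alpha=0.05):
    """Number of tests at or below the single Bonferroni cut-off alpha / m."""
    cutoff = alpha / len(p_values)
    return sum(p <= cutoff for p in p_values)

def n_significant_fdr(p_values, q=0.05):
    """Number of tests declared significant by the rank-based cut-off
    q * i / m: the largest rank i whose p-value meets its cut-off."""
    m = len(p_values)
    ranked = sorted(p_values)
    passing = [i for i, p in enumerate(ranked, start=1) if p <= q * i / m]
    return max(passing, default=0)

p_values = [0.01] * 10
print("Bonferroni:", n_significant_bonferroni(p_values))
print("False Discovery Rate:", n_significant_fdr(p_values))
```

Here the tenth-ranked p-value is compared with 0.05 × 10 / 10 = 0.05, which it meets, so that cut-off is re-applied to all ten tests.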

Strategies for applying multiple comparison corrections to tables

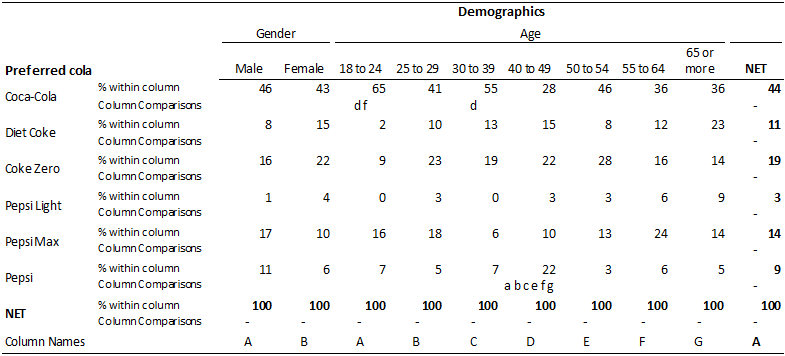

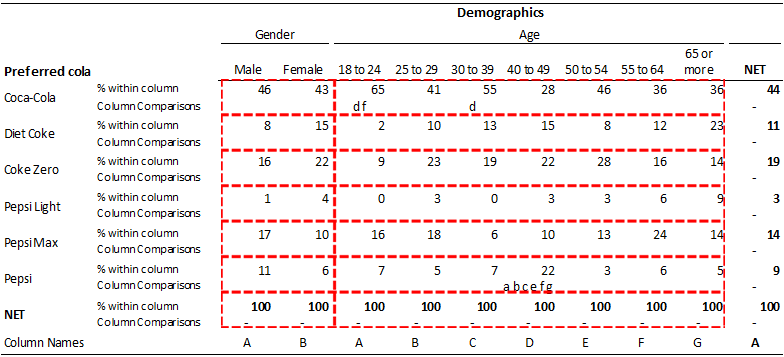

The table below shows a crosstab involving a banner that has both age and gender. When applying multiple comparison corrections a decision needs to be made about which tests to use in any correction. For example, if investigating the difference in preference for Coca-Cola between people aged 18 to 24 and those aged 55 to 64 we can:

- Ignore all the other tests and employ the Formal Hypothesis Testing approach.

- Only take into account the other 20 comparisons of preference for Coca-Cola by age (as done in the above examples). Thus, 21 comparisons are taken into account.

- Take into account all the comparisons involving Coca-Cola preference on this table. As there is also a comparison between genders, this involves 22 different comparisons.

- Take into account all the comparisons involving cola preferences and age. As there are six colas and 21 comparisons within each this results in 126 comparisons.

- Take into account all the comparisons on the table (i.e., 132 comparisons).

- Take into account all the comparisons involving Coca-Cola preference in the entire study (e.g., comparisons by income, occupation, etc.).

- Take into account all the comparisons of all the cola preferences in the entire study.

- Take into account all the comparisons of any type in the study.

Looking at the Bonferroni correction (due to its simplicity), we can see that a problem we face is that the more comparisons we make, the smaller the cut-off level becomes. When we employ Formal Hypothesis Testing the cut-off is 0.05. When we take into account 21 comparisons the cut-off drops to 0.05 / 21 = 0.0024, and when we take into account 132 comparisons it drops to 0.0004. With most studies, such small cut-offs can lead to few and sometimes no results being marked as significant, and thus the cure to the problem of false discoveries becomes possibly worse than the problem itself.
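The comparison counts and the corresponding Bonferroni cut-offs can be tabulated with a short sketch. It assumes seven age columns, six colas and a two-column gender banner (giving one gender comparison per cola), which is what the counts of 21, 22, 126 and 132 listed above imply; the scope labels are made up for this example.

```python
from math import comb

alpha = 0.05
n_colas = 6
age_pairs = comb(7, 2)     # 21 pairwise comparisons between 7 age groups
gender_pairs = comb(2, 2)  # assumption: two gender columns, so 1 comparison

scopes = {
    "single test": 1,
    "Coca-Cola by age": age_pairs,
    "Coca-Cola, whole table": age_pairs + gender_pairs,
    "all colas by age": n_colas * age_pairs,
    "whole table": n_colas * (age_pairs + gender_pairs),
}

# Bonferroni cut-off for each choice of which comparisons to correct for
cutoffs = {scope: alpha / n for scope, n in scopes.items()}
for scope, n in scopes.items():
    print(f"{scope}: {n} comparisons, cut-off {cutoffs[scope]:.4f}")
```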

The False Discovery Rate correction is considerably better in this situation because the cut-off is computed from the data, and if the data contains lots of small p-values the false discovery rate can identify lots of significant results, even in very large numbers of comparisons (e.g., millions). Nevertheless, a pragmatic problem is that taking into account comparisons that occur across multiple tables requires working out in advance which tables will be created. This can be practical in situations where there is a finite number of comparisons of interest (e.g., when comparing everything by demographics). However, it is quite unusual that the number of potential comparisons of interest is fixed and, as a result, it is highly unusual in survey research to attempt to perform corrections for multiple comparisons across multiple tables (unless testing for significance of the entire table, rather than of results within a table).

The other traditional multiple comparison corrections, such as Tukey HSD and Fisher LSD, can only be computed in the situation involving comparisons within a row for independent sub-groups (i.e., the situation used in the worked examples above involving 21 comparisons).

Within survey research, the convention with column comparisons is to perform comparisons within rows and within sets of related categories, as shown on the table below. Where a multiple comparison correction is made for cell comparisons, the convention is to apply the correction to the whole table (although this is a weak convention, as corrections for cell comparisons are not common). The difference between these two approaches is likely because the cell comparisons are generally more powerful and thus applying the correction to the whole table is not as dire.

Software comparisons

Most programs used for creating tables do not provide multiple comparison corrections. Most statistical programs do provide multiple comparison corrections; however, they are generally not available as a part of any routines used for creating tables. For example, R contains a large number of possible corrections (including multiple variants of the False Discovery Rate correction), but they need to be programmed one-by-one if used for crosstabs and there is no straightforward way of displaying their results (i.e., R does not create tables with either Cell Comparisons or Column Comparisons).

| Package | Support for multiple comparison corrections |
|---|---|
| Excel | No support. |
| Q | Can perform False Discovery Rate correction on all tables using Cell Comparisons and/or Column Comparisons and can apply a number of the traditional corrections (Bonferroni, Tukey HSD, etc.) on Column Comparisons of means and proportions. Corrections can be conducted for the whole table, or, within row and related categories (Within row and span to use the Q terminology). |
| SPSS | Supports most traditional corrections as a part of its various analysis of variance tools (e.g., Analyze : Compare Means : One-Way ANOVA : Post Hoc), but does not use False Discovery Rate corrections. Bonferroni corrections can be placed on tables involving Column Comparisons in the Custom Tables module. |
| Wincross | For crosstabs involving means can perform a number of the traditional multiple comparison corrections. |

Also known as

- Post hoc testing

- Multiple comparison procedures

- Correction for data dredging

- Correction for data snooping

- Correction for data mining (this usage is very old-fashioned and predates the use of data mining techniques).

- Multiple hypothesis testing

See also

- Formal Hypothesis Testing

- The Role of Statistical Tests in Survey Analysis

- Statistical Tests on Tables

- Why Significance Test Results Change When Columns Are Added Or Removed

Notes

References

- [1] Hochberg, Y. and A. C. Tamhane (1987). Multiple Comparison Procedures. New York, Wiley.

- [2] Benjamini, Y. and Y. Hochberg (1995). "Controlling the False Discovery Rate: A Practical and Powerful Approach to Multiple Testing." Journal of the Royal Statistical Society, Series B 57(1): 289-300.

- [3] Storey, J. D. (2002). "A direct approach to false discovery rates." Journal of the Royal Statistical Society, Series B 64(3): 479-498.

- [4] Benjamini, Y. (2010). "Discovering the false discovery rate." Journal of the Royal Statistical Society, Series B 72(4): 405-416.